Do you want to know what an activation function is, what types exist, and which ones perform well in artificial neural networks? If yes, then give this article a few minutes. In this article, I will cover all the details of activation functions in an artificial neural network.

Hello, & Welcome!

In this blog, I am going to tell you:

- What is the Activation Function?

- Types of Activation Functions.

- Examples of how to choose an activation function.

So, let’s get started-

What is the Activation Function?

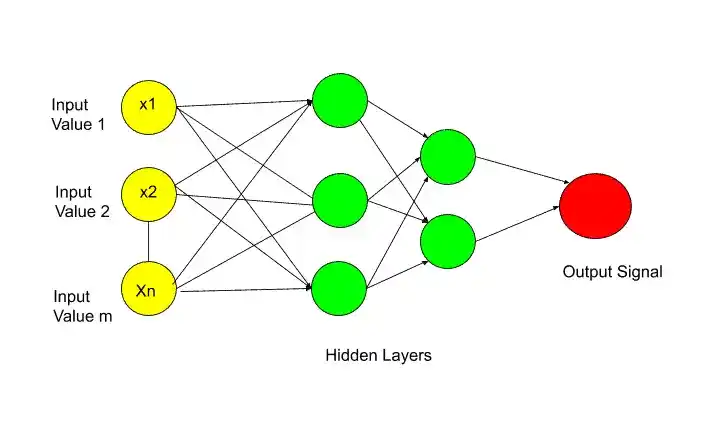

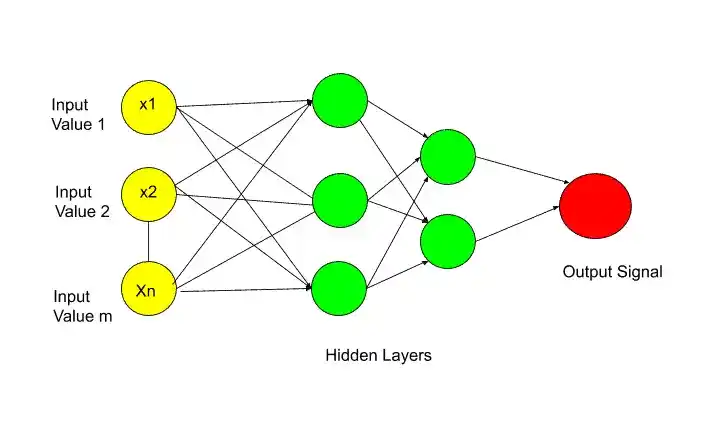

In simple words, the activation function generates an output based on the input signals it receives. To understand what an activation function does, you first need to understand the structure of a neural network. I have discussed the artificial neural network in detail in the “Artificial Neural Network- Neuron” article, but I will go over it again here so that it is easy to follow.

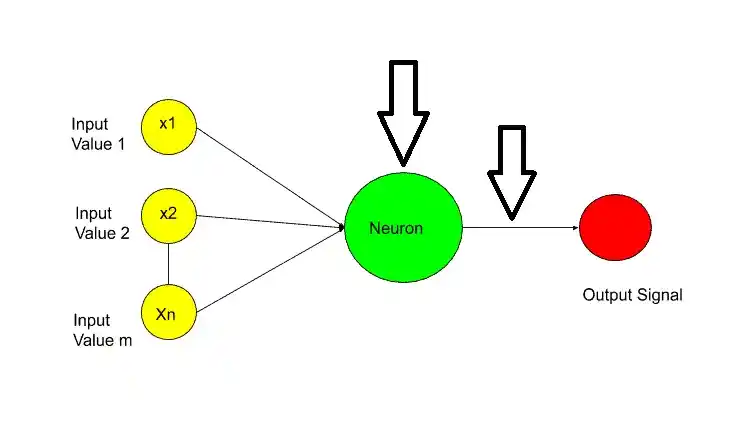

Here you can see that the hidden layer receives input signals from the input layer. So what does the activation function do? In the hidden layer, the input signals are first combined into a weighted sum: each input is multiplied by its weight, and the results are added together. That weighted sum is then sent to a function, and that function is the activation function. The activation function generates the neuron's output from this combined signal.

So the main purpose of the activation function is to generate an output from the input signals. Activation functions are applied in the hidden layers and in the output layer, as the arrows in the picture show.
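To make these two steps concrete, here is a minimal Python sketch of a single neuron: it combines the input signals into a weighted sum and then passes that sum through an activation function. The function name `neuron_output`, the optional `bias` parameter, and the example numbers are illustrative assumptions, not part of any particular library.

```python
import math
from typing import Callable, Sequence


def neuron_output(inputs: Sequence[float], weights: Sequence[float],
                  activation: Callable[[float], float], bias: float = 0.0) -> float:
    """Hypothetical single neuron: weighted sum first, then the activation function."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Step 1: combine the input signals into a weighted sum (plus an optional bias).
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Step 2: pass the weighted sum through the activation function.
    return activation(weighted_sum)


# Made-up input signals and weights, used only for illustration.
print(neuron_output([1.0, 0.5, -1.0], [0.4, 0.2, 0.1], activation=math.tanh))
```

Passing the activation in as a parameter mirrors the idea in the text: the weighted sum is the same, and only the function applied to it changes.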

Now let’s move to the types of the activation function.

Types of Activation Functions.

There are several types of activation functions, but I will discuss only the most popular and widely used ones.

So the most popular activation functions are-

- Threshold Function.

- Sigmoid Function.

- Rectifier Function.

- Hyperbolic Tangent (tanh).

- Linear Function.

1. Threshold Activation Function-

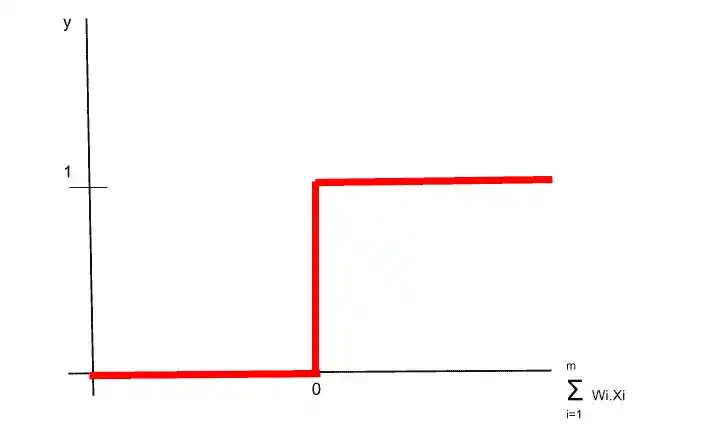

The threshold function looks something like the graph above.

In the threshold function, the X-axis shows the weighted sum, and the Y-axis shows the output, which is either 0 or 1. The threshold function is a very simple kind of function. Its formula is:

$$\varphi(x) = \begin{cases} 1 & \text{if } x \ge 0 \\ 0 & \text{if } x < 0 \end{cases}$$

According to the threshold function, if the weighted sum is less than 0, the function outputs 0; if it is greater than or equal to 0, the function outputs 1. The threshold function is a kind of yes/no function, and it is very straightforward.
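Here is a minimal Python sketch of the threshold formula, assuming x is the weighted sum that has already been computed. The name `threshold` is just an illustrative choice.

```python
def threshold(x: float) -> int:
    """Threshold (step) activation: 1 if x >= 0, otherwise 0."""
    return 1 if x >= 0 else 0


# A few example weighted sums and the value the threshold function passes on.
for z in (-2.0, -0.1, 0.0, 0.5, 3.0):
    print(f"phi({z:+.1f}) = {threshold(z)}")
```

Note that an input of exactly 0 maps to 1, matching the "x >= 0" case of the formula.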

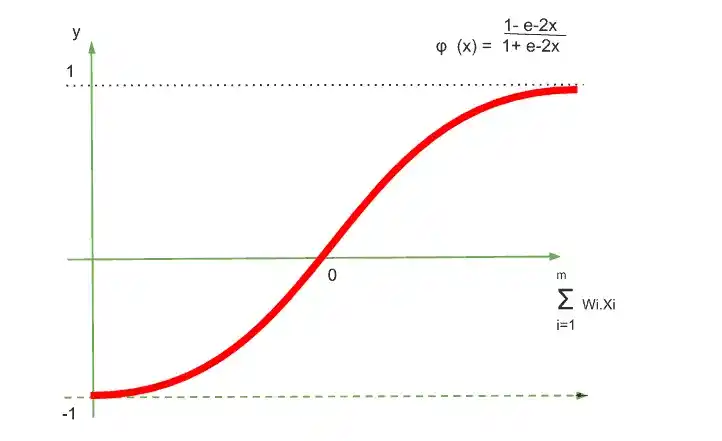

2. Sigmoid Function-

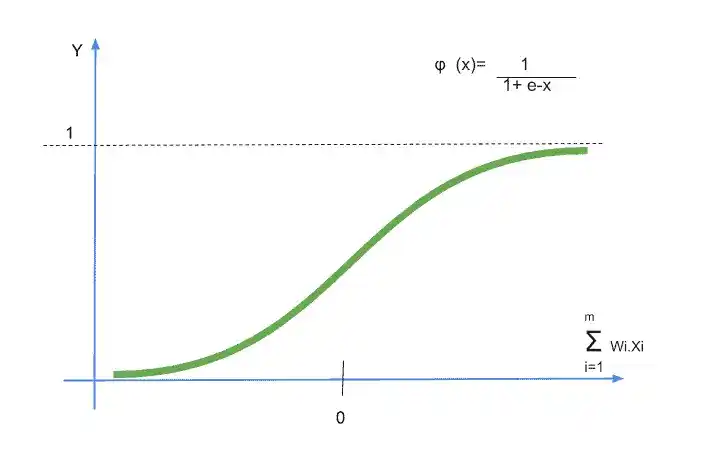

The sigmoid function looks something like the graph above.

The formula of the sigmoid function is:

$$\varphi(x) = \frac{1}{1 + e^{-x}}$$

Here, x is the value of the weighted sum. This is the same function used in logistic regression.

So what is good about the sigmoid function? It is smooth: unlike the threshold function, it has no kinks in its curve, just a nice, gradual progression. As x becomes more negative, the output approaches 0, and as x becomes more positive, the output approaches 1. At x = 0 the output is exactly 0.5.

The sigmoid function is very useful in the final layer, that is, the output layer, especially when you are trying to predict probabilities.
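A minimal Python sketch of the sigmoid formula, using only the standard `math` module. Splitting on the sign of x is a design choice of this sketch (not something from the formula itself): it keeps `math.exp` from overflowing for very negative inputs, while both branches compute the same value 1 / (1 + e^(-x)).

```python
import math


def sigmoid(x: float) -> float:
    """Sigmoid activation: 1 / (1 + e^(-x)), computed without overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, rewrite as e^x / (1 + e^x) so math.exp never sees a huge argument.
    z = math.exp(x)
    return z / (1.0 + z)


for v in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"sigmoid({v:+.1f}) = {sigmoid(v):.4f}")
```

At x = 0 the sketch returns exactly 0.5, the midpoint of the curve, and the outputs move toward 0 or 1 as x moves away from 0 in either direction.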

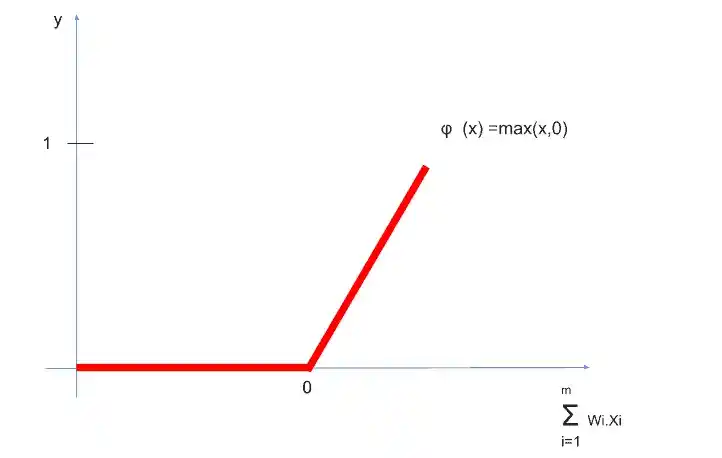

3. Rectifier Function-

The rectifier function (often called ReLU, the rectified linear unit) is one of the most popular functions in artificial neural networks, even though it has a kink in its curve. The formula of the rectifier function is:

$$\varphi(x) = \max(x, 0)$$

The rectifier function outputs 0 for every negative input, and from 0 onward it increases linearly as the input value increases.

The rectifier function is the one most commonly used in hidden layers.
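Here is a minimal Python sketch of the rectifier, plus a helper showing its slope on either side of the kink. The names `relu` and `relu_slope` are illustrative. The value chosen for the slope at exactly x = 0 is an assumed convention, since the slope is undefined at the kink.

```python
def relu(x: float) -> float:
    # phi(x) = max(x, 0): negative inputs become 0, other inputs pass through unchanged.
    return max(x, 0.0)


def relu_slope(x: float) -> float:
    # Slope is 0 left of the kink and 1 right of it; at exactly x = 0 the slope
    # is undefined, and this sketch simply picks 0 there (an assumed convention).
    return 1.0 if x > 0 else 0.0


print([relu(v) for v in (-3.0, -0.5, 0.0, 0.5, 3.0)])        # [0.0, 0.0, 0.0, 0.5, 3.0]
print([relu_slope(v) for v in (-3.0, -0.5, 0.0, 0.5, 3.0)])  # [0.0, 0.0, 0.0, 1.0, 1.0]
```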

4. Hyperbolic Tangent (tanh)-

The hyperbolic tangent is very similar to the sigmoid function, but it goes below 0: its output ranges from -1 to 1 instead of from 0 to 1. Its formula is:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

Because its output is centered around 0, this can be useful for some applications.
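To see how closely tanh is related to the sigmoid, the sketch below compares Python's built-in `math.tanh` with the standard identity tanh(x) = 2 * sigmoid(2x) - 1, which is a sigmoid stretched and shifted to span -1 to 1. The helper names are illustrative, and `sigmoid` here is the plain textbook form, which is fine for the small inputs used.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def tanh_via_sigmoid(x: float) -> float:
    # tanh(x) = 2 * sigmoid(2x) - 1: a sigmoid rescaled to the range (-1, 1).
    return 2.0 * sigmoid(2.0 * x) - 1.0


for v in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"{v:+.1f}: math.tanh={math.tanh(v):+.4f}  via sigmoid={tanh_via_sigmoid(v):+.4f}")
```

Both columns agree, and negative inputs now produce negative outputs instead of values near 0.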

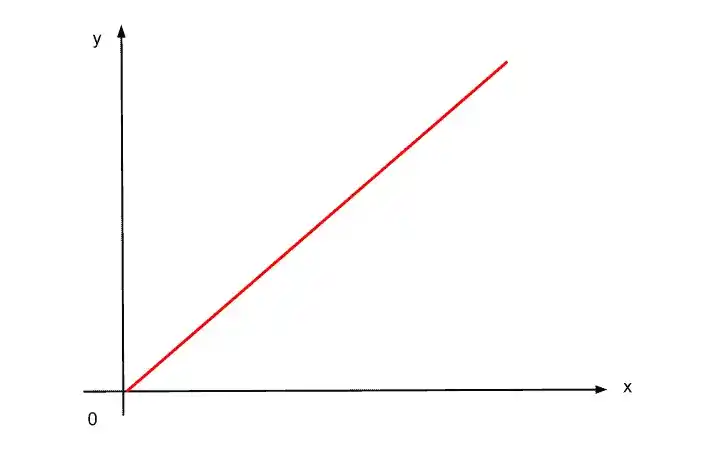

5. Linear Function-

The linear function is very simple and has no conditions. The formula of the linear function is:

$$f(x) = a + x$$

Here, a is the bias and x is the weighted sum. The result is a linear representation (a straight line). The advantage of the linear function is its simplicity.
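A minimal Python sketch of the linear function exactly as written above, with a as the bias and x as the weighted sum. The function name `linear` and the default bias of 0 are illustrative assumptions.

```python
def linear(x: float, a: float = 0.0) -> float:
    # f(x) = a + x, with a the bias and x the weighted sum, following the formula above.
    return a + x


# Unlike sigmoid or tanh, the output is not squashed into a fixed range.
print([linear(v, a=1.0) for v in (-2.0, 0.0, 2.0, 100.0)])  # [-1.0, 1.0, 3.0, 101.0]
```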

Examples of how to choose an activation function.

Here I will walk you through two cases and which activation function you should choose in each.

Case 1- For Binary Variables

Suppose your dependent variable, the output you are trying to predict, is binary (Y = 0 or 1). Which activation function should you use in that case?

There are two options you can use here. The first is the threshold function: its output is either 0 or 1, so it fits this case properly and gives the result only in 0 or 1 form.

The second option is the sigmoid function. Its output is also between 0 and 1, just as you need. However, you want exactly 0 or 1, and the sigmoid also gives values in between, so it is not exactly what you want. What you can do in this case is treat its output as a probability: the sigmoid tells you the probability that Y is equal to 1. The closer the output gets to the top of the curve, the more likely Y is 1 (yes) rather than 0 (no).

So those are the two options you can use if you have a binary variable.
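Here is a minimal sketch comparing the two options on a few made-up weighted sums arriving at a single output neuron. The threshold gives a hard 0/1 answer, while the sigmoid gives a value read as P(Y = 1). Cutting the sigmoid output at 0.5 is an assumed (though common) decision rule; it reproduces the threshold's labels because sigmoid(x) >= 0.5 exactly when x >= 0.

```python
import math


def threshold(x: float) -> int:
    return 1 if x >= 0 else 0


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical weighted sums at the output neuron.
for z in (-2.0, -0.2, 0.0, 0.7, 2.5):
    p = sigmoid(z)             # read as the probability that Y = 1
    label = 1 if p >= 0.5 else 0
    print(f"z={z:+.1f}  threshold -> {threshold(z)}  sigmoid -> P(Y=1)={p:.3f}, label {label}")
```

The sigmoid keeps extra information: a value close to 0.5 signals an uncertain prediction, while the threshold hides that difference.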

Case 2- For Hidden and Output Layers

Suppose you have a neural network with an input layer, a hidden layer, and an output layer.

In the first layer, we have some inputs. They are sent to the first hidden layer, where the activation function is applied. The activation function used most often here is the rectifier function. After the rectifier function is applied, the signals pass on to the output layer, where the sigmoid function is applied. After that, you get your final output.

This combination is very common in neural networks:

Hidden Layer- Rectifier Function.

Output Layer- Sigmoid Function.
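Here is a minimal sketch of this combination for a tiny network with 3 inputs, 2 hidden neurons, and 1 output neuron. The weights and biases are made-up values chosen only for illustration; in a real network they would be learned during training. The helper `dense` is a hypothetical name for "compute one weighted sum (plus bias) per neuron".

```python
import math
from typing import List, Sequence


def relu(x: float) -> float:
    return max(x, 0.0)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs: Sequence[float], weights: Sequence[Sequence[float]],
          biases: Sequence[float]) -> List[float]:
    # One weighted sum (plus bias) per neuron; weights[j] holds neuron j's input weights.
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]


def forward(inputs: Sequence[float]) -> float:
    # Hypothetical parameters: 3 inputs -> 2 hidden neurons -> 1 output neuron.
    hidden_w = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
    hidden_b = [0.0, 0.1]
    out_w = [[1.2, -0.7]]
    out_b = [0.05]
    hidden = [relu(z) for z in dense(inputs, hidden_w, hidden_b)]  # hidden layer: rectifier
    (out_z,) = dense(hidden, out_w, out_b)
    return sigmoid(out_z)                                          # output layer: sigmoid


print(forward([1.0, 0.0, 2.0]))
```

The rectifier keeps the hidden layer cheap to compute, and the sigmoid at the end turns the final weighted sum into a value between 0 and 1 that can be read as a probability.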

So, that’s all about activation functions in an artificial neural network. I hope you now have a clear idea about activation functions and their most popular types. If you have any questions, feel free to ask me in the comment section.

Enjoy Learning!

All the Best!

Thank YOU!

Thought of the Day…

‘It’s what you learn after you know it all that counts.’

– John Wooden


Written By Aqsa Zafar

Founder of MLTUT, Machine Learning Ph.D. scholar at Dayananda Sagar University. Researches social media depression detection. Creates tutorials on ML and data science for diverse applications. Passionate about sharing knowledge through the website and social media.
