I recently completed the IBM Generative AI Engineering Professional Certificate on Coursera. In this IBM Generative AI Engineering Professional Certificate review, I share my real experience: what I learned, what stood out, and what you can expect from the program.

Generative AI is reshaping how developers create software. Yet many learners find it hard to go beyond using tools to actually build end-to-end AI applications. That’s why I joined this program: to gain hands-on experience in designing and deploying GenAI systems using Python, Flask, LangChain, and Hugging Face.


After completing several projects and labs, here’s my honest take on the course, its strengths, challenges, and who it’s best suited for.

You can check the IBM Generative AI Engineering Professional Certificate on Coursera here to explore the full course structure and enrollment options.

Now, let’s begin my IBM Generative AI Engineering Professional Certificate review.

IBM Generative AI Engineering Professional Certificate Review

What the Program Offers

Provider: IBM (via Coursera)

Level: Beginner

Duration: Around 6 months (6 hours per week)

Structure: 16-course series

Rating: 4.7 (2,795 reviews)

Pace: Fully self-paced

The IBM Generative AI Engineering Professional Certificate is designed for learners who want to move beyond theory and start creating real GenAI projects. IBM developed this program to help beginners gain job-ready Generative AI engineering skills in less than six months, even without a technical background.

The learning journey begins with the basics of Python, AI, and deep learning, and gradually advances to hands-on GenAI development. You will explore how to build, fine-tune, and deploy Generative AI models for text, image, and natural language applications.

Each module combines interactive labs, coding exercises, and guided projects, allowing you to apply what you learn step by step. By the end of the program, you will have both the foundational knowledge and practical experience to start building your own GenAI applications using LangChain, Hugging Face, and other modern AI frameworks.

If you want to see the complete course list and requirements, visit the IBM Generative AI Engineering Professional Certificate page on Coursera here.

My Experience So Far

I joined this program because I wanted a structured and guided way to master Generative AI engineering through real practice, not just theory. I had already explored GenAI tools on my own, but I wanted a course that could connect everything, from fundamentals to practical deployment.

What impressed me right from the start was IBM’s strong focus on implementation. The lessons do not stop at explaining concepts. Each course includes interactive labs, coding notebooks, and guided projects that make you apply every topic you learn. This balance between learning and doing helped me understand how real GenAI workflows come together.

I also appreciated how the content builds progressively. The early modules focus on essential AI foundations and Python for AI, while the later ones move into hands-on GenAI projects using frameworks like LangChain and Hugging Face. This gradual structure made the entire learning path smooth and clear.

In the next part, I’ll share how each section of the program is designed and what kind of skills you actually develop as you move through it.

Course Breakdown

Course 1: Introduction to Artificial Intelligence (AI)

The first course, Introduction to Artificial Intelligence, gave me a solid starting point before moving toward Generative AI. It took around 12 hours to finish, and I liked how it started with the basics and then moved step by step into real examples.

At the beginning, the course explained what Artificial Intelligence means and how it is used in different areas like healthcare, finance, and education. The examples were clear and helped me understand how AI supports everyday tools and systems.

After that, the lessons introduced the main ideas behind machine learning, deep learning, and neural networks. I had some idea about them before, but the short lessons and visuals made it easier to understand how these models learn and make predictions. The mix of concept and real use was well balanced.

What I liked most was the part that talked about Generative AI in business. It showed how companies are using it to create products, improve customer support, and find new ways to work faster. This helped me connect the technology with real business value, which I found very useful.

There was also a small project where I had to design a simple Generative AI solution for a business challenge. It made me think about the ethical side of AI, such as fairness and data privacy. I appreciated that the course included this part because many beginner courses skip these important topics.

If I had to mention one area for improvement, I would say the course focused more on theory than coding. Still, as a first course, it worked well to build a clear base before moving into the technical modules.

Overall, this course helped me understand the core ideas of Artificial Intelligence and prepared me well for the next lessons in the program.

Course 2: Generative AI – Introduction and Applications

The second course, Generative AI: Introduction and Applications, helped me understand what makes Generative AI different from other types of AI. It took me about 8 hours to complete, and I liked how it explained every concept in a clear and practical way.

The course started by explaining how Generative AI works and how it is different from discriminative AI. The examples made it easy to understand the difference between models that create something new and models that only classify or predict. This clarity helped me build a strong base before moving to tools and applications.

Next, the lessons focused on real-world use cases. I learned how Generative AI is being used in content creation, code generation, image design, audio editing, and even video production. Seeing these examples helped me connect technical ideas with real industry applications. It gave me a sense of how widely GenAI is being adopted today.

The course also introduced some popular tools and models used for creating text, code, and visuals. I found it helpful that the explanations were simple and did not assume a strong technical background. It gave me a clear picture of what each model is meant for and how developers use them in different projects.

There was also an important section on Responsible AI. It covered how to use Generative AI responsibly, with a focus on transparency, accuracy, and ethical use. I appreciated this part because it reminded me that technical skill alone is not enough; understanding the responsible side of AI is just as important.

If I had to point out one limitation, I would say the course could have included more interactive exercises. Most of the content was theoretical, though it was well explained.

Overall, this course gave me a good understanding of how Generative AI is applied in different sectors. It connected the concepts from the first course with real-world possibilities and prepared me for the hands-on projects that came later in the program.

Course 3: Generative AI – Prompt Engineering Basics

The third course, Generative AI: Prompt Engineering Basics, was short but very practical. It took me around 9 hours to complete and focused on how to write clear and effective prompts.

The course started with the basics of prompt engineering and why it is an important skill when working with Generative AI tools. I had used tools like ChatGPT before, but this course helped me see how the structure and wording of a prompt can change the final result.

The lessons showed different prompt patterns with examples. I liked how simple and direct the explanations were. Each example made it clear how a small change in phrasing can make the output more useful.

The course also covered best practices for writing prompts. I learned how to test, adjust, and improve them to get better results. These tips were practical and easy to apply in real tasks.

There was also a short overview of popular prompt engineering tools. It helped me understand what options are available for writing and testing prompts for both text and image generation.

If I had to suggest one improvement, it would be to add more exercises where learners can write and test prompts themselves. Most of the lessons were explanations, and a few hands-on examples would have made it even stronger.

Overall, this course was simple, clear, and helpful. It taught me how to think carefully before writing a prompt and how to guide a model more effectively. It also prepared me well for the next part of the program, where practical projects begin.

Course 4: Python for Data Science, AI & Development

The fourth course, Python for Data Science, AI & Development, was one of the most detailed parts of the program. It took me around 25 hours to complete and focused entirely on building a strong base in Python.

It started with the fundamentals of Python, such as syntax, variables, data types, and basic operations. The lessons were clear and easy to follow, even for beginners. I liked how each concept was explained step by step before moving to the next topic.

After the basics, the course introduced data structures, loops, functions, and object-oriented programming. The short coding tasks after each lesson made it easier to understand how these concepts work in practice.

Next, the course covered Pandas and NumPy. This was one of my favorite parts because it showed how to handle and analyze data efficiently. The use of Jupyter Notebooks made learning feel organized and hands-on.

Another interesting part was working with APIs and web scraping. I learned how to collect data from websites using the requests library and extract useful information with BeautifulSoup. These lessons were simple but very practical for real data projects.

The course also covered a few more topics like JSON, data import and export, and basic automation. The examples were straightforward, showing how Python can be used in different data tasks.

If I had to mention one thing, it would be the length. Since the course is detailed, it takes time to finish, but the pace is manageable if you stay consistent.

Overall, this course built a strong foundation in Python. It helped me understand how to write clean code, use the right libraries, and prepare data for analysis or application development.

Course 5: Developing AI Applications with Python and Flask

The fifth course, Developing AI Applications with Python and Flask, was one of the most practical courses in the program. It took me around 11 hours to finish and focused on using Python to build and deploy web applications.

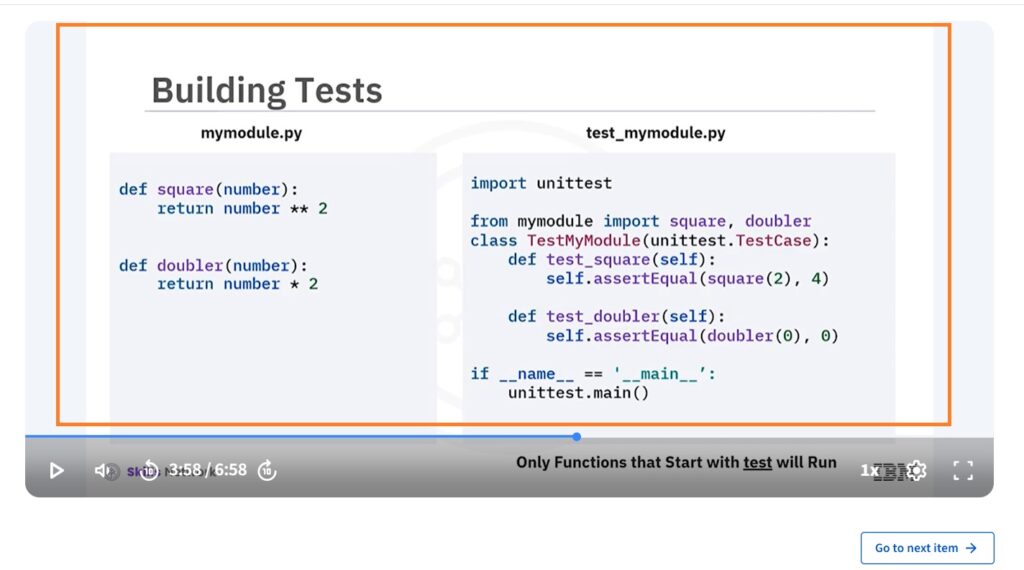

The course began by explaining the application development process in a very simple way. It covered each step from planning and writing code to testing and deployment. This gave me a clear understanding of how real software projects are managed from start to end.

I learned how to create Python modules, write unit tests, and follow PEP 8 coding standards. These lessons helped me write cleaner and more organized code. The short exercises made it easy to apply what I learned immediately.
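To make "a Python module plus unit tests" concrete, here is a minimal sketch using only the standard library's `unittest`. The function `normalize_score` and its validation rules are hypothetical, chosen for illustration; they are not taken from the course labs.

```python
"""A tiny module with one function and its unit tests (illustrative only)."""
import unittest


def normalize_score(score: float, max_score: float = 100.0) -> float:
    """Scale a raw score to the 0-1 range, rejecting invalid input."""
    if max_score <= 0:
        raise ValueError("max_score must be positive")
    if not 0 <= score <= max_score:
        raise ValueError("score must be between 0 and max_score")
    return score / max_score


class TestNormalizeScore(unittest.TestCase):
    def test_typical_value(self):
        self.assertAlmostEqual(normalize_score(75), 0.75)

    def test_out_of_range_raises(self):
        with self.assertRaises(ValueError):
            normalize_score(120)
```

Saved as a file (say `scores.py`), the tests can be run with `python -m unittest scores`, and a style checker such as `pycodestyle` or `flake8` can flag PEP 8 issues.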

The main part of the course was about Flask, a framework for creating web applications. I learned how to build routes, handle user requests, manage errors, and perform CRUD operations. Building a small web app helped me see how everything connects inside a real project.

Later, the course showed how to connect a Python application with external APIs and host it on a web server. These lessons were simple and gave me a basic understanding of deployment and integration.

The section on testing and debugging was also useful. It taught me how to test my application and fix small issues before deployment.

If I had to mention one area for improvement, it would be that the deployment part could have included more examples. Even then, the instructions were clear enough to understand the overall process.

Overall, this course helped me apply Python to real development work. It brought together everything I had learned so far and showed me how to turn code into a working web application.

Course 6: Building Generative AI-Powered Applications with Python

The sixth course, Building Generative AI-Powered Applications with Python, was one of the most useful parts of the program. It took me around 14 hours to complete and focused on building real projects using Python.

The course started by explaining large language models, speech technologies, and tools like IBM watsonx and Hugging Face. The lessons were easy to follow and helped me understand how these tools work together in application development.

After that, I learned how to build chatbots and applications using Python and retrieval-augmented generation. This part was very practical. It showed how to connect data sources and make the system respond more accurately to user input.

The course also included lessons on speech-to-text and text-to-speech. I learned how to use these features to add voice interaction to an application. It was interesting to see how both audio and text could work together inside one project.

Later, the focus moved to web application development using Flask and Gradio. The course also introduced some simple front-end tools like HTML, CSS, and JavaScript to build basic user interfaces. I found this part helpful because it showed how the backend and frontend connect to form a complete application.

Each section included small coding tasks that made the topics easier to understand. The examples were straightforward and showed how to use different tools in a clear way.

If I had to mention one thing that could be better, it would be adding more examples for connecting different frameworks. Some parts moved quickly, especially for learners new to development.

Overall, this course tied everything together. It helped me see how Python, Flask, and AI models combine in real applications. It was a solid step toward building my own working projects.

Course 7: Data Analysis with Python

The seventh course, Data Analysis with Python, helped me build confidence in working with real data. It took me around 16 hours to complete and focused on how to clean, analyze, and understand data step by step.

The course started with lessons on data cleaning and preparation. I learned how to handle missing values, correct formatting errors, and organize data properly. This part was very practical because real data is rarely clean, and these techniques made it easier to prepare datasets for analysis.
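A minimal sketch of these cleaning steps, written in plain Python so it runs without extra installs. The records and field names are hypothetical. In pandas, the same steps would look roughly like `df["city"].str.strip().str.title()` and `df["temp"].fillna(df["temp"].mean())`.

```python
from statistics import mean

# Hypothetical raw records: stray whitespace, inconsistent casing, one missing value.
raw = [
    {"city": " Delhi ", "temp": "31.5"},
    {"city": "mumbai", "temp": None},
    {"city": "PUNE", "temp": "29.0"},
]


def clean(records):
    """Normalize text fields, convert numbers, and fill missing temps with the column mean."""
    rows = [
        {
            "city": r["city"].strip().title(),
            "temp": float(r["temp"]) if r["temp"] is not None else None,
        }
        for r in records
    ]
    known = [r["temp"] for r in rows if r["temp"] is not None]
    fill_value = mean(known)  # mean imputation: one simple strategy among several
    for r in rows:
        if r["temp"] is None:
            r["temp"] = fill_value
    return rows


cleaned = clean(raw)
print(cleaned)
```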

Then came exploratory data analysis using Pandas, NumPy, and SciPy. I worked with real datasets to find patterns and insights. These exercises showed me how to study data closely before moving to any kind of modeling.

I also learned how to manage data with dataframes. This included sorting, grouping, and filtering information to make it easier to understand. The visualizations with Matplotlib were simple but helped me see what the data was actually showing.

Later, the course introduced regression models using Scikit-learn. This part explained how to build and test models for prediction. It gave me a clear idea of how data can support real decisions when used correctly.
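The idea behind a simple regression model can be shown without any library: fit a line by least squares, then use it to predict. This is a plain-Python sketch with made-up data; in scikit-learn the equivalent is `LinearRegression().fit(X, y)`, which exposes the fitted values as `coef_` and `intercept_`.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) minimizing squared error for y = slope * x + intercept."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var = sum((x - x_mean) ** 2 for x in xs)
    slope = cov / var
    intercept = y_mean - slope * x_mean
    return slope, intercept


def predict_line(x, slope, intercept):
    return slope * x + intercept


# Made-up data that lies exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_simple_linear_regression(xs, ys)
print(slope, intercept, predict_line(5.0, slope, intercept))
```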

The examples were practical, and each section had small coding activities that made the topics clear. I liked that everything was explained in plain language and connected to real use cases.

If I had to point out one thing, it would be that some parts of regression could have included more background explanation. Still, the exercises were good enough to understand how things work.

Overall, this course made me comfortable with the full data analysis process. It showed me how to clean data, explore it properly, and build simple models using Python.

Course 8: Machine Learning with Python

The eighth course, Machine Learning with Python, helped me understand how real machine learning works in practice. It took me about 20 hours to complete and focused on using Python to apply different learning methods.

The course started with an overview of supervised and unsupervised learning. The explanations were simple and showed where each method is used. It also introduced basic tools and workflows that form the core of machine learning projects.

After that, I learned how to use Scikit-learn to apply algorithms such as regression, classification, clustering, and dimensionality reduction. Each topic had small coding tasks that helped me see how the methods work on real data.

The next part focused on model evaluation. I learned how to check a model’s accuracy, measure errors, and validate results. This helped me understand how to know when a model is performing well and what to adjust when it is not.
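To show what checking a classifier involves, here is a small plain-Python sketch of common metrics computed from true and predicted labels; the labels are invented. scikit-learn provides the same measures as `accuracy_score`, `precision_score`, and `recall_score` in `sklearn.metrics`, and `cross_val_score` for validating across several train/test splits.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (true positives, false positives, false negatives, true negatives)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = len(y_true) - tp - fp - fn
    return tp, fp, fn, tn


def evaluate(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),            # share of all predictions that were right
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # how many predicted positives were real
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # how many real positives were found
    }


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
scores = evaluate(y_true, y_pred)
print(scores)
```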

I also worked on small projects that combined everything into one process. These exercises showed how to prepare data, train models, and test results. It gave me a clear picture of how end-to-end projects are built.

The lessons were well-paced, though some topics like clustering and dimensionality reduction could have used more explanation. Still, the examples were enough to understand the main ideas.

Overall, this course gave me a strong base in machine learning. It taught me how to use Python to build, test, and improve models in a structured way.

Course 9: Introduction to Deep Learning and Neural Networks with Keras

The ninth course, Introduction to Deep Learning and Neural Networks with Keras, helped me understand how neural networks work in practice. It took me around 10 hours to complete and focused on the basics of building and training models.

The course began with the core ideas of deep learning. It explained what neurons are, how layers are connected, and how networks learn from data. The lessons were short and clear, which made the concepts easy to understand.
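The idea that a network "learns from data" comes down to nudging weights to reduce a loss. Below is a minimal plain-Python sketch of a single sigmoid neuron trained by gradient descent to learn logical AND; the data, learning rate, and epoch count are arbitrary choices for illustration. In Keras, a comparable one-neuron model would be `keras.Sequential([keras.layers.Dense(1, activation="sigmoid")])` compiled with `loss="binary_crossentropy"`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, labels, lr=1.0, epochs=2000):
    """Train one sigmoid neuron with full-batch gradient descent on cross-entropy loss."""
    n_features = len(samples[0])
    weights, bias = [0.0] * n_features, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - y  # gradient of cross-entropy w.r.t. the neuron's weighted sum
            for j in range(n_features):
                grad_w[j] += error * x[j] / n
            grad_b += error / n
        # Step each parameter against its gradient; lr controls the step size.
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


def predict_neuron(x, weights, bias):
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [0, 0, 0, 1]  # logical AND
weights, bias = train_neuron(X, y)
print([predict_neuron(x, weights, bias) for x in X])
```

Changing `lr` or `epochs` is a quick way to see how training settings affect the result.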

Next, I learned how to use the Keras library to build simple models for regression and classification. Each exercise guided me through the process of creating, training, and testing a model. It helped me see how different parameters affect results.

The course also explained some common challenges in training deep networks, such as overfitting and data quality issues. The examples showed how to improve model performance by adjusting settings and testing different options.

Later, I was introduced to CNNs, RNNs, and transformers. These topics were presented at a basic level but gave me a good idea of how deep learning is used in image and text tasks.

The coding activities were simple but useful. They showed how theory turns into working code. Even though this course was shorter than some of the others, it covered all the main points I needed to get started.

If I had to mention one thing, it would be that the advanced parts could use a little more depth. Still, it was enough to build a solid foundation.

Overall, this course gave me a clear understanding of how neural networks are structured and how to create them using Keras. It made complex topics easy to follow and helped me gain confidence in applying deep learning to small projects.

Course 10: Generative AI and LLMs – Architecture and Data Preparation

The tenth course, Generative AI and LLMs: Architecture and Data Preparation, helped me understand how different model structures work and how to prepare data for them. It took me around 5 hours to finish and focused on the technical side of working with text data.

The course started with an overview of RNNs, transformers, VAEs, GANs, and diffusion models. Each type was explained with short examples that made it easy to see how they differ and where each one is used.

Then, I learned about well-known language models such as GPT, BERT, BART, and T5. The lessons showed how these models are used for common text tasks like translation, summarization, and question answering. It gave me a good picture of how language models are applied in practice.

Next came the part on data preparation. I learned how to clean and prepare text using tokenization. The course used tools like NLTK, spaCy, and BertTokenizer to turn words into a format models can understand. This step made me realize how important good preprocessing is before training any model.

I also built a data loader in PyTorch. It handled tokenization, numericalization, and padding for text datasets. This exercise was detailed but clear and showed how data moves into a model during training.
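The three steps named here can be sketched in plain Python. This is an illustration, not the course's PyTorch code: it assumes a simple lowercase whitespace tokenizer and reserves id 0 for padding and id 1 for unknown words. In PyTorch, the padding step is typically done inside a `collate_fn` passed to `DataLoader`, for example with `torch.nn.utils.rnn.pad_sequence(..., batch_first=True, padding_value=0)`.

```python
from collections import Counter

PAD, UNK = "<pad>", "<unk>"


def build_vocab(texts, min_freq=1):
    """Map each token to an integer id; ids 0 and 1 are reserved for padding and unknown tokens."""
    counts = Counter(tok for t in texts for tok in t.lower().split())
    vocab = {PAD: 0, UNK: 1}
    for tok, c in sorted(counts.items()):
        if c >= min_freq:
            vocab[tok] = len(vocab)
    return vocab


def numericalize(text, vocab):
    """Tokenize, then replace each token with its id (unknown words map to <unk>)."""
    return [vocab.get(tok, vocab[UNK]) for tok in text.lower().split()]


def pad_batch(sequences, pad_id=0):
    """Right-pad every sequence to the length of the longest one in the batch."""
    max_len = max(len(s) for s in sequences)
    return [s + [pad_id] * (max_len - len(s)) for s in sequences]


texts = ["the cat sat", "the dog sat down"]
vocab = build_vocab(texts)
batch = pad_batch([numericalize(t, vocab) for t in texts])
print(vocab)
print(batch)
```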

The lessons were short and direct. I liked how each section focused on one idea at a time.

If I had to point out one thing, the PyTorch part could have included a bit more explanation for beginners. Still, with some practice, it was easy to follow.

Overall, this course gave me a strong understanding of how to prepare and manage data for large language models. It connected model concepts with the actual coding steps needed to handle text properly.

Course 11: Gen AI Foundational Models for NLP and Language Understanding

The eleventh course, Gen AI Foundational Models for NLP and Language Understanding, focused on how text is converted into numbers for language processing. It took me around 9 hours to finish and covered several basic and practical methods.

The course started with one-hot encoding, bag-of-words, and embeddings. These methods were explained clearly, showing how they turn text into numerical data. The examples were simple and helped me understand how models use numbers to find meaning in words.
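A minimal sketch of the two simplest encodings, using a toy two-sentence corpus and a lowercase whitespace tokenizer (both assumptions for illustration). An embedding layer, by contrast, maps each word id to a learned dense vector, as `torch.nn.Embedding` does in PyTorch.

```python
def build_vocabulary(docs):
    """Sorted list of unique lowercase tokens across all documents."""
    return sorted({word for doc in docs for word in doc.lower().split()})


def one_hot(word, vocabulary):
    """A vector with a single 1 at the word's position in the vocabulary."""
    return [1 if w == word.lower() else 0 for w in vocabulary]


def bag_of_words(doc, vocabulary):
    """Count of each vocabulary word in the document; word order is discarded."""
    words = doc.lower().split()
    return [words.count(w) for w in vocabulary]


docs = ["I like AI", "I like AI and I like Python"]
vocabulary = build_vocabulary(docs)
print(vocabulary)
print(one_hot("like", vocabulary))
print(bag_of_words(docs[1], vocabulary))
```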

Then, I learned to build Word2Vec models using CBOW and Skip-gram. These methods showed how words that appear in similar contexts end up close to each other in vector space. It made clear why these dense vectors capture word meaning better than one-hot or bag-of-words representations.
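The difference between the two Word2Vec variants is clearest in the training examples each one generates. The sketch below, with a window of one word on each side (an arbitrary choice), only builds those examples; a real model then learns word vectors from them. Libraries such as gensim expose this choice through the `sg` parameter of `Word2Vec` (`sg=1` for Skip-gram, `sg=0` for CBOW).

```python
def skipgram_pairs(tokens, window=1):
    """(center, context) pairs: Skip-gram learns to predict each context word from the center."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens, window=1):
    """(context words, target) examples: CBOW learns to predict the center from its context."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


tokens = "the quick brown fox".split()
print(skipgram_pairs(tokens))
print(cbow_examples(tokens))
```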

Next, the course introduced language models using n-grams and small neural networks. I learned how these models predict the next word in a sentence and how simple patterns can form the base of more advanced systems.
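A count-based bigram model (an n-gram model with n = 2) is the simplest version of next-word prediction. This sketch uses a made-up three-sentence corpus and no smoothing, so word pairs never seen in training get no probability; practical n-gram models usually add smoothing.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word follows another; <s> marks the start of a sentence."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(model, prev):
    """Maximum-likelihood estimate P(next | prev) = count(prev, next) / count(prev, *)."""
    following = model.get(prev.lower(), Counter())
    total = sum(following.values())
    return {word: c / total for word, c in following.items()} if total else {}


def predict_next(model, prev):
    probs = next_word_probs(model, prev)
    return max(probs, key=probs.get) if probs else None


corpus = ["I like tea", "I like coffee", "I drink tea"]
model = train_bigram_model(corpus)
print(next_word_probs(model, "I"))
print(predict_next(model, "I"))
```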

After that, I worked on sequence-to-sequence models using encoder–decoder RNNs. This part focused on translation and text transformation tasks. The coding exercise helped me see how inputs and outputs are linked through the model.

The lessons were short and focused. Each topic built on what I had learned before, which made it easier to follow.

If I had to mention one thing, it would be that the RNN section could have used more detail, but it still gave a clear overview.

Overall, this course gave me a solid understanding of how text is processed and represented in machine learning. It also showed how different model types are used for simple language tasks like prediction and translation.

Course 12: Generative AI Language Modeling with Transformers

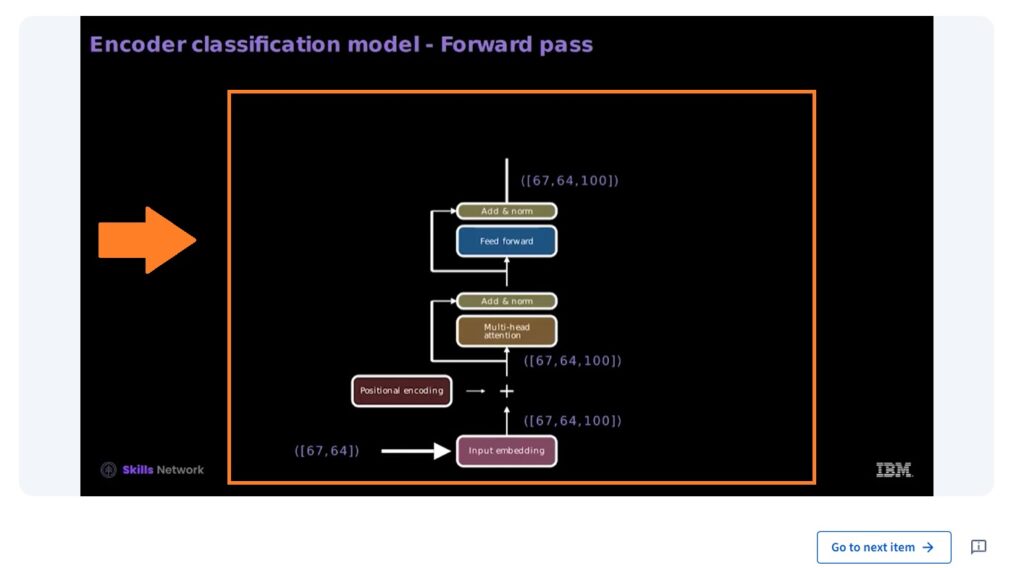

The twelfth course, Generative AI Language Modeling with Transformers, helped me understand how transformer models handle language. It took me around 9 hours to complete and focused on building a clear understanding of attention and model structure.

The course started with the attention mechanism. It showed how attention helps a model focus on the right words in a sentence to understand meaning. The examples were simple and made the idea easy to follow.
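Attention is usually written as softmax(QK^T / √d_k)V. Here is a minimal plain-Python sketch of that formula for small lists of vectors; the query, key, and value vectors are made up. Recent PyTorch versions provide an optimized version as `torch.nn.functional.scaled_dot_product_attention`.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how strongly this query attends to each position
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# One query that resembles the first key more than the second.
outputs, weights = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, outputs)
```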

Then, the lessons explained the difference between decoder-based models like GPT and encoder-based models like BERT. The comparison was straightforward and showed how each model works for different language tasks.

Next, I worked on building key parts of a transformer using PyTorch. I learned how positional encoding, attention, and masking fit together inside a model. The exercises were short but practical and helped me see how each part supports the overall structure.
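Two of these pieces can be sketched without PyTorch. The positional encoding below follows the sinusoidal scheme from the original Transformer paper (the course may have used a different variant, such as learned position embeddings), and the causal mask marks which earlier positions each token may attend to. In practice, masked-out attention scores are set to negative infinity before the softmax.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: sin on even dimensions, cos on odd ones, at decreasing frequencies."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


def causal_mask(seq_len):
    """True where position `row` may attend to position `col` (itself and earlier tokens only)."""
    return [[col <= row for col in range(seq_len)] for row in range(seq_len)]


pe = positional_encoding(4, 6)
print(pe[0])
print(causal_mask(3))
```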

Later, I used transformer models for small projects such as text classification and translation. The lessons used PyTorch and Hugging Face to apply pre-trained models to real examples.

The coding parts were slightly advanced, but the steps were clear and easy to repeat. With a little patience, the results were rewarding to see.

If I had to mention one thing, it would be that the concepts moved fast at times. Still, the examples were enough to make the process understandable.

Overall, this course gave me a solid understanding of how transformers process text. It showed how attention works in practice and how modern models are used for real-world language tasks.

Course 13: Generative AI Engineering and Fine-Tuning Transformers

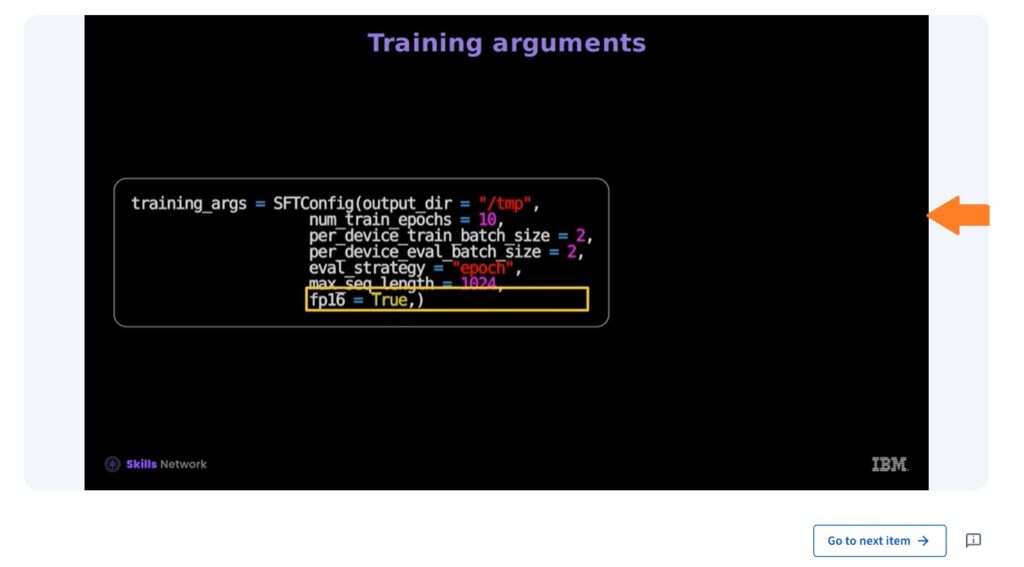

The thirteenth course, Generative AI Engineering and Fine-Tuning Transformers, focused on how to adjust large language models for specific tasks. It took me around 8 hours to complete and was more practical than theoretical.

The course began with an overview of transformer-based models and how they can be reused for new projects. It explained how fine-tuning helps adapt an existing model instead of building one from the beginning. This made the process feel more manageable and efficient.

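Next, I learned about parameter-efficient fine-tuning (PEFT) using methods like LoRA and QLoRA. Instead of updating every weight, these approaches freeze the base model and train a small number of added parameters (QLoRA also loads the base model in 4-bit precision), which cuts memory and compute while keeping results competitive. The examples were simple and helped me understand how to use these methods in small experiments.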
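The core LoRA idea fits in a few lines: keep the pretrained weight matrix W frozen and learn a low-rank update B·A scaled by alpha/r. This plain-Python sketch uses tiny made-up matrices and also counts trainable parameters for one hypothetical 4096 × 4096 layer at rank 8. With Hugging Face's `peft` library, these settings correspond to `LoraConfig(r=..., lora_alpha=...)`; QLoRA's 4-bit quantization of the base model is not shown here.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_weight(w, b, a, alpha, r):
    """Effective weight W + (alpha / r) * B @ A; only B (d x r) and A (r x k) are trained."""
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def trainable_params(d, k, r):
    """Parameters updated by full fine-tuning vs. by a rank-r LoRA adapter on one d x k layer."""
    return {"full": d * k, "lora": r * (d + k)}


W = [[1.0, 0.0], [0.0, 1.0]]  # frozen pretrained weights (d = k = 2)
# B and A stand in for values after some training; LoRA starts B at zeros,
# so the update B @ A is zero at the beginning of fine-tuning.
B = [[1.0], [0.0]]            # d x r, with r = 1
A = [[0.0, 2.0]]              # r x k
print(lora_weight(W, B, A, alpha=1.0, r=1))
print(trainable_params(4096, 4096, 8))
```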

Then, the lessons showed how to load models, run inference, and train them using PyTorch and Hugging Face. This part was clear and easy to follow. It covered each step needed to prepare, test, and fine-tune a model properly.

I also worked on short exercises that used pre-trained models for language tasks such as classification and summarization. It was useful to see how only a few changes in training can make a model perform a new job well.

The lessons were short and focused. The instructions were clear and stayed practical from start to finish.

If I had to mention one thing, the explanations for LoRA and QLoRA could have included a few more examples. Still, the exercises gave a good idea of how they work.

Overall, this course showed me how to fine-tune large models in an efficient way. It helped me understand how to use existing tools to build working language applications without heavy training requirements.

Course 14: Generative AI Advanced Fine-Tuning for LLMs

The fourteenth course, Generative AI Advanced Fine-Tuning for LLMs, focused on improving large language models through advanced fine-tuning methods. It took me around 9 hours to complete and continued naturally from the earlier fine-tuning course.

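The course started with instruction tuning and reward modeling. Instruction tuning trains a model on instruction-response pairs so it follows instructions more accurately, while reward modeling trains a separate model to score responses so that this feedback can guide further fine-tuning. The lessons used Hugging Face tools to show how such feedback can be used to improve responses.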

Next, I studied direct preference optimization (DPO). This section explained how to define preferences between a chosen and a rejected response and use them to fine-tune model behavior directly, without training a separate reward model. The examples showed how to set up the process and where the partition function fits into the derivation of the DPO objective.
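For reference, the DPO loss for one preference pair compares how much more the policy favors the chosen response over the rejected one, relative to a frozen reference model. The partition function cancels in the derivation, which is why it does not appear in the loss. This plain-Python sketch takes summed log-probabilities as inputs (the numbers are invented); Hugging Face's TRL library wraps the full training loop in its `DPOTrainer`.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """-log sigmoid(beta * ((pi_c - ref_c) - (pi_r - ref_r))) for one preference pair."""
    chosen_shift = policy_chosen - ref_chosen        # how much the policy raised the chosen answer
    rejected_shift = policy_rejected - ref_rejected  # how much it raised the rejected answer
    return -log_sigmoid(beta * (chosen_shift - rejected_shift))


# Policy identical to the reference: the loss equals log(2).
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Policy moved toward the chosen answer and away from the rejected one: the loss drops.
print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```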

The following part covered proximal policy optimization (PPO). I learned how to build scoring functions, tokenize data, and use these steps to fine-tune models. The coding examples made it clear how each part connects.

Each topic was short and focused on showing the process rather than long explanations. It was easy to follow along and test everything using the examples provided.

Some sections, especially those involving reinforcement learning, moved a bit fast. Still, the material was clear enough to understand with practice.

Overall, this course explained how to refine models using structured feedback and small adjustments. It gave me a clear idea of how advanced fine-tuning is done in real projects without needing full retraining.

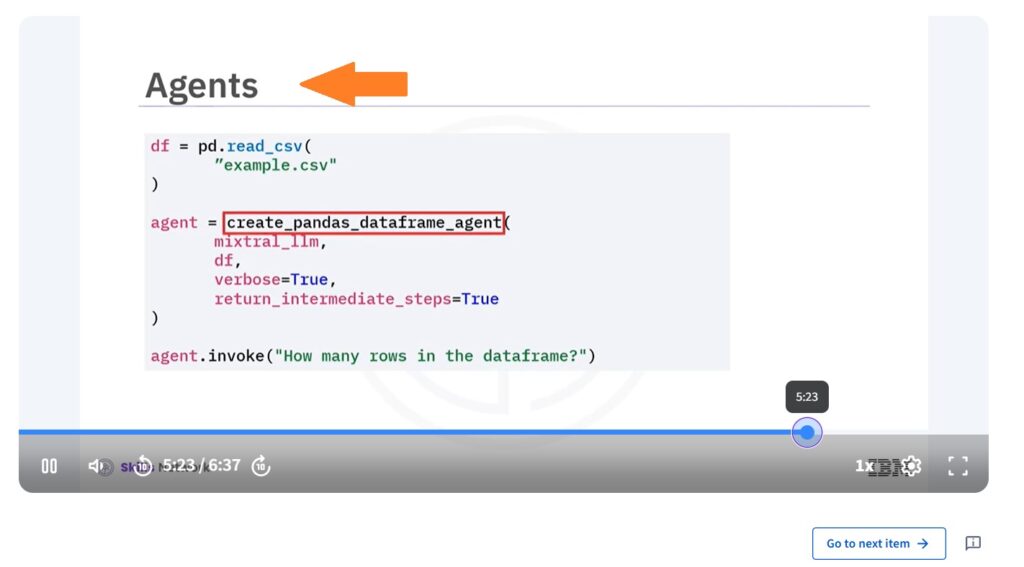

Course 15: Fundamentals of AI Agents Using RAG and LangChain

The fifteenth course, Fundamentals of AI Agents Using RAG and LangChain, focused on building simple and functional AI agents. It took me around 7 hours to complete and was practical from start to finish.

The course started with an introduction to RAG (Retrieval-Augmented Generation) and LangChain. It explained how retrieval connects a model with external information and how LangChain helps put the pieces together. The examples were clear and showed how data retrieval improves model responses.

Next, I learned about in-context learning and prompt design. This part focused on how to write better prompts and structure them to get useful outputs. The small exercises helped me understand how prompt wording changes the final result.
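In-context learning usually means placing a few worked examples in the prompt before the new input. This sketch only builds the prompt string; the instruction, the examples, and the `Input:`/`Output:` layout are assumptions for illustration, and sending the prompt to a model is left out.

```python
def few_shot_prompt(instruction, examples, query):
    """Assemble an in-context-learning prompt: instruction, worked examples, then the new input."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}\n")
    parts.append(f"Input: {query}\nOutput:")
    return "\n".join(parts)


prompt = few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The labs were excellent.", "positive"), ("Setup kept failing.", "negative")],
    "The final project was rewarding.",
)
print(prompt)
```

Keeping every example in the same layout gives the model a clear pattern to continue after the final `Output:`.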

Then, the lessons introduced key parts of LangChain, such as tools, components, chat models, chains, and agents. Each topic had a short code example that made it easier to see how everything fits together.

Later, I worked on a small project that combined RAG, PyTorch, Hugging Face, LLMs, and LangChain. It showed how to connect different frameworks to build a working application.

The course was short but well-paced. Each section explained one idea at a time, which made it easy to follow.

If I had to mention one thing, a few examples could have used more explanation for beginners, especially in the integration part. Even so, the steps were simple enough to repeat.

Overall, this course gave me a clear understanding of how retrieval-based systems work and how LangChain can be used to build small, useful applications.

Course 16: Project – Generative AI Applications with RAG and LangChain

The last course, Project: Generative AI Applications with RAG and LangChain, was where I finally built my own project. It took me around 9 hours to complete and helped me apply everything I had learned throughout the program.

I started by creating a vector database to store document embeddings. I added documents, generated embeddings, and built a retriever that fetched relevant information when a user entered a question. Setting it up gave me a clear understanding of how data is stored and searched in real applications.
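The retrieval step can be sketched with an in-memory store. To keep it runnable without a model, this sketch uses word-count vectors as stand-in "embeddings" and cosine similarity for ranking; a real project, like the one in the course, would use a neural embedding model and a vector database. The class and function names are hypothetical, and the documents are invented.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in embedding: counts of lowercase word tokens."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Keeps (text, vector) pairs and returns the k most similar texts for a query."""

    def __init__(self):
        self.entries = []

    def add(self, text):
        self.entries.append((text, embed(text)))

    def retrieve(self, query, k=2):
        query_vec = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vec, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question, context_docs):
    """Put retrieved passages in front of the question so the LLM answers from them."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}\nAnswer:"


store = InMemoryVectorStore()
for doc in [
    "Refunds are processed within 5 business days.",
    "Our office is open Monday to Friday.",
    "Shipping is free for orders over 50 dollars.",
]:
    store.add(doc)

question = "How many days until refunds are processed?"
print(build_prompt(question, store.retrieve(question, k=1)))
```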

After that, I built a question-answering bot using LangChain and a large language model. I connected it with the retriever so the bot could pull the right information before responding. Then, I designed a simple Gradio interface so users could interact with the bot directly. Seeing it respond with accurate answers was a rewarding moment.

I faced a few challenges while setting up dependencies and managing responses, but each step helped me understand the workflow better. Once everything started running smoothly, it felt like a complete product, not just a guided example.

By the end of the course, I had a working Generative AI application that used RAG and LangChain to answer questions from custom data. Building it myself made all the earlier lessons come together in a practical way.

If you want to build a similar project, you can enroll in the IBM Generative AI Engineering Professional Certificate here.

Final Summary: My Experience with the IBM Generative AI Engineering Professional Certificate

After completing all 16 courses, I used this IBM Generative AI Engineering Professional Certificate review to look back at what worked well and what could have been better. The program covered everything from basic AI concepts and Python to transformers, fine-tuning, and building a working project using RAG and LangChain.

What I Liked

- Practical Work

Each course included labs and small coding exercises. I learned better by doing, not just watching. The final project, where I built a working question-answering app, tied everything together.

- Step-by-Step Structure

The order of the courses made sense. It started with the basics and slowly moved to more complex topics like transformers, large models, and RAG. I never felt lost between topics.

- Real Tools

The program used the same tools professionals use: Python, Flask, PyTorch, Keras, Hugging Face, and LangChain. Learning them in real tasks made the experience more useful.

- Short and Focused Lessons

The lessons were short and to the point. They covered concepts clearly without long or confusing detours.

What Could Be Better

- Limited Depth in Some Topics

A few courses only gave a surface-level explanation, especially the ones on RNNs and transformers. They introduced ideas but didn’t go deep enough to build full confidence.

- Less Coding in Early Courses

The first few modules were heavy on theory and light on coding. More exercises there would have made the start stronger.

- Fast-Paced Advanced Courses

The later modules on fine-tuning and transformers moved quickly. I could keep up, but beginners might need extra time or outside reading.

- Setup Issues

Some labs took extra time to set up. A few dependencies and library versions needed fixing manually, which slowed things down.

- Fewer Real Examples

The program explained technical parts well but could have included more real-world examples from industries using generative AI.

My Honest Take

The program gave me a good foundation in generative AI development. I learned how to prepare data, build models, and deploy applications using real frameworks. It was neither too theoretical nor too basic: a balanced mix for someone serious about learning how generative systems work.

The final project was the most valuable part. Building my own retrieval-based chatbot using LangChain, Gradio, and vector databases gave me practical experience I can use in real work.

This certificate is best for people who already know basic Python and want to understand how today’s AI tools fit together. It won’t make you an expert overnight, but it will show you how everything connects in a real workflow.

Who Should Enroll

Overall, this IBM Generative AI Engineering Professional Certificate review shows that the program suits people who want to learn how real generative systems are built and used. It’s a good fit for:

- Students or professionals who want to start a career in AI and prefer learning by doing rather than only theory.

- Developers who already know Python and want to move into building applications that use large language models.

- Data scientists who understand machine learning and now want to work with LLMs, transformers, and modern AI tools.

- Researchers or technical learners who want hands-on experience with frameworks like LangChain, Hugging Face, and PyTorch.

It may not be the right choice for complete beginners who have never coded before. A basic understanding of Python and how data projects work will make the experience smoother and less frustrating.

This program is practical and structured, but it expects you to follow along, code, and troubleshoot on your own. If you enjoy that kind of learning, it’s a strong choice.

Cost and Duration

Cost: Around ₹3,200 per month (about $39 USD) with a Coursera subscription.

Duration: Roughly 6 months if you study around 6 hours a week.

Financial Aid: Available through Coursera for eligible learners.

You can also access this certificate through Coursera Plus, which costs around ₹48,000 per year (about $399 USD). It’s a better choice if you plan to take multiple IBM or AI courses since it covers all of them under one plan.
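A quick break-even calculation makes the trade-off concrete. It uses only the USD prices quoted above and assumes they are current and that you pay month by month without gaps. Actual prices vary by region and change over time.

```python
MONTHLY_USD = 39       # monthly subscription price quoted above (assumed current)
PLUS_YEARLY_USD = 399  # Coursera Plus yearly price quoted above (assumed current)


def monthly_plan_cost(months, monthly=MONTHLY_USD):
    """Total paid on the monthly subscription for a given number of months."""
    return months * monthly


def break_even_months(monthly=MONTHLY_USD, yearly=PLUS_YEARLY_USD):
    """Fewest months at which the monthly plan costs more than one year of Plus."""
    return yearly // monthly + 1


print(monthly_plan_cost(6))   # → 234
print(break_even_months())    # → 11
```

On these prices, finishing this certificate in about six months costs roughly $234 on the monthly plan. Plus only starts to pay off if you expect to keep learning for about 11 months or more within the year.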

You can start your IBM Generative AI Engineering Professional Certificate here with a Coursera subscription for about ₹3,200 or $39 per month.

Career Value

Generative AI is growing fast. IBM cites a Statista projection of 46% annual growth in the GenAI market through 2030, which suggests that demand for skilled professionals in this area will keep rising.

Finishing this certificate adds real weight to your LinkedIn profile and portfolio. It gives you hands-on projects that show your ability to build and fine-tune models, not just understand theory.

After completing the program, you can apply for roles such as:

- Generative AI Engineer

- Prompt Engineer

- AI Developer

- Machine Learning Engineer

- NLP Engineer

The projects you complete during the course also serve as proof of your practical work. Having something real to show during interviews makes a strong impression.

Comparison: IBM vs Others

| Program | Provider | Duration | Focus | Summary |
|---|---|---|---|---|
| IBM Generative AI Engineering | IBM | 6 months | Building complete GenAI applications | Best for practical, hands-on learning |
| Google AI Essentials | Google | 2 months | Basics of AI and its uses | Good starting point but limited coding practice |
| DeepLearning.AI Generative AI Specialization | DeepLearning.AI (Andrew Ng) | 1 month | Key LLM concepts and workflows | Strong on theory but short in practical depth |

The IBM program stands out for its project-based structure and step-by-step learning.

If you want to understand how to build and deploy real applications, IBM offers the most complete experience among the three.

Is It Worth It in 2026?

Yes. This certificate is worth it if your goal is to learn how to build working generative AI projects from start to finish.

The IBM Generative AI Engineering Professional Certificate takes effort and consistency. It is not a quick course, but it teaches real, practical skills. By the end, you will know how to design, train, and deploy functional applications using the same tools used in the industry.

In 2026, companies are focusing more on applying AI in products and workflows. They need people who can build and manage these systems, not just understand the theory. This program helps you learn those skills step by step.

If you already know some Python and want to move into building generative applications, this program is a good choice. It gives you real project experience that you can add to your portfolio and share during interviews.

Some parts feel technical and may take extra effort to follow, but that is also what makes it valuable. It gives you a complete view of how modern systems are created and deployed.

Based on my experience, this IBM Generative AI Engineering Professional Certificate is worth it if you want to build real generative AI projects in 2026 and beyond.

Conclusion

After completing this program, my IBM Generative AI Engineering Professional Certificate review comes down to one clear point: the program delivers real learning through practice, not just theory. Every course builds on the previous one until you reach the final project, where you create a complete working application.

This IBM Generative AI Engineering Professional Certificate review shows that the program is well-structured for anyone who wants to move from learning to doing. It focuses on real projects, clear explanations, and tools that are used in the field every day.

For this IBM Generative AI Engineering Professional Certificate review, I also considered how it compares with other options. While some courses focus on short lessons or limited coding, IBM’s program takes the time to teach every step from basics to deployment. That makes it more practical and lasting.

One thing stood out most in my experience: the final project is where everything connects. You apply what you learned about Python, LangChain, and RAG to build something you can actually show to others. That hands-on experience is what gives this program its real value.

In short, this IBM Generative AI Engineering Professional Certificate review reflects my honest experience. It is a good choice for learners who already know basic Python and want to move into building real applications. It takes time and focus, but what you gain is practical skill and confidence.

If you want structured guidance, real coding work, and a project to showcase, I would say the IBM Generative AI Engineering Professional Certificate is worth taking in 2026 and beyond.

You can check out the IBM Generative AI Engineering Professional Certificate here and start your own learning journey today.

Happy Learning!


Thank YOU!


Thought of the Day…

‘It’s what you learn after you know it all that counts.’

– John Wooden

Written By Aqsa Zafar

Aqsa Zafar is a Ph.D. scholar in Machine Learning at Dayananda Sagar University, specializing in Natural Language Processing and Deep Learning. She has published research in AI applications for mental health and actively shares insights on data science, machine learning, and generative AI through MLTUT. With a strong background in computer science (B.Tech and M.Tech), Aqsa combines academic expertise with practical experience to help learners and professionals understand and apply AI in real-world scenarios.