Hello everyone! I’m Aqsa Zafar, and today I want to share my honest and detailed review of Udacity’s Large Language Models Nanodegree.

I know some of you might be wondering if this program is really worth your time and money. Or maybe you’re curious about learning LLMs but don’t know where to start. If that sounds like you, this review is for you.

I’m going to walk you through everything I went through — what I learned, what I enjoyed, where I struggled, and who I think would benefit the most from this program.

I’ll also share some personal tips that I wish I had known before starting, so you can make the most of the experience if you decide to enroll.

So, let’s sit down and go through this together. I’ll keep it real, straightforward, and as helpful as possible.

Now, without further ado, let’s get started.

My Review of Udacity’s Large Language Models Nanodegree

Why I Took This Nanodegree

As someone who has always been passionate about AI, machine learning, and natural language processing, I wanted to take my understanding of large language models (LLMs) further. I didn’t want to just use tools like ChatGPT or GPT APIs — I wanted to understand how they work.

I specifically wanted to learn:

- How LLMs work on the inside

- How to fine-tune them on my own datasets

- How to create real-world applications, like chatbots, that people can actually use

When I found this nanodegree, it felt like a good match. It offered hands-on projects, detailed lessons, and practical skills I could apply in real situations. That’s what motivated me to sign up.

What’s Inside the Nanodegree?

Let me walk you through how the program is set up.

The nanodegree includes:

- 5 main lessons

- 1 final project where you get to build your own custom chatbot

Here is a quick look at each part of the journey:

Lesson 1: Introduction to LLMs

This first lesson is all about giving you a strong foundation in how large language models work. It’s meant to help you understand not just what LLMs do but how they are structured and why they work the way they do.

In this lesson, you will learn:

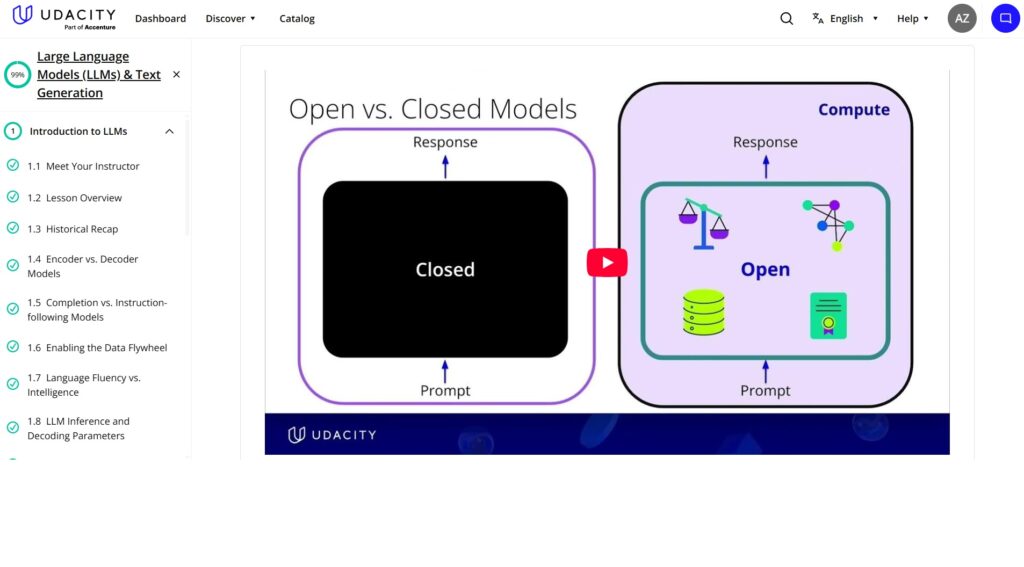

- Different types of LLMs, like encoder models, decoder models, and instruction-following models

- What inference means and how decoding parameters affect the output

- The basics of prompt engineering — learning how to carefully craft prompts to get the best possible answers from a model

- Hands-on demos, including things like next-token prediction and chain-of-thought prompting, so you can actually see these concepts in action
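To make the point about decoding parameters concrete, here is a minimal sketch of temperature scaling, one common decoding parameter. It is an illustration rather than course material: the three candidate tokens and their scores are made up, and `softmax_with_temperature` is a hypothetical helper name.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens (not from a real model).
candidates = ["cat", "dog", "fish"]
logits = [2.0, 1.0, 0.1]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, dict(zip(candidates, (round(p, 3) for p in probs))))
```

A lower temperature concentrates probability on the top-scoring token, while a higher one flattens the distribution and makes less likely tokens easier to pick.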

What I liked about this lesson:

The instructor did a great job of explaining complex topics in a clear and easy-to-understand way. I also appreciated that we didn’t just stay in theory — early on, you set up an OpenAI account and start experimenting. That made the learning feel practical and engaging right from the start.

Lesson 2: NLP Fundamentals

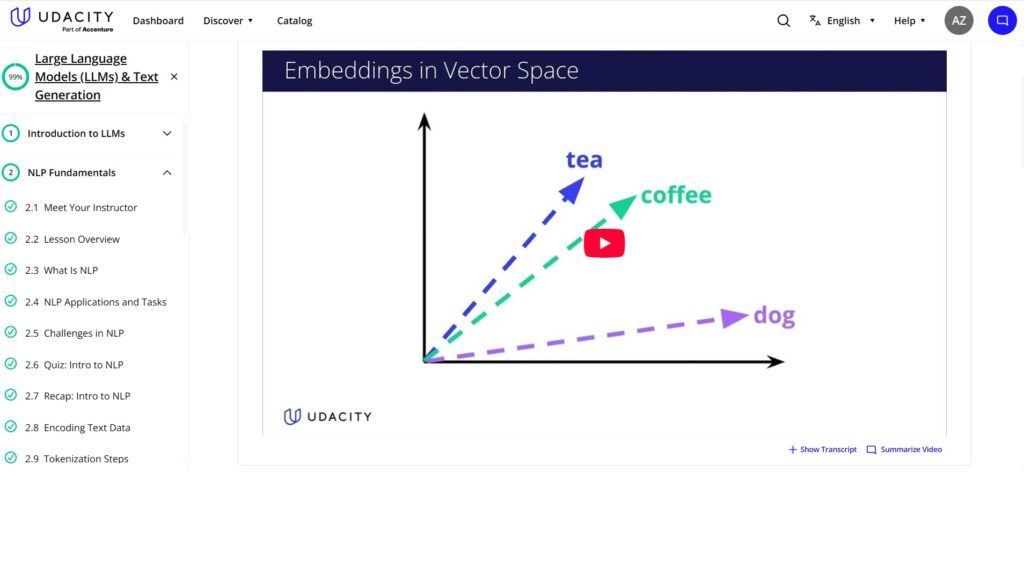

In this lesson, you learn the basic ideas behind natural language processing (NLP), which you need in order to understand how language models work. It helps you see how text is broken down and prepared so the models can work with it.

You will study:

- The basics like tokenization, embeddings, and how text is encoded so that models can understand it

- How models handle sequences of words or tokens to make sense of sentences

- How autoregressive models create text one word (or token) at a time

- Different sampling methods that decide how the model picks the next word when it’s generating text
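The last two points can be sketched with a toy example. The code below assumes a tiny hand-written table of next-token probabilities (a bigram table, not a real LLM) and compares greedy decoding with top-k sampling. All names and numbers are hypothetical.

```python
import random

# Hypothetical next-token probabilities. A real LLM conditions on the whole
# preceding sequence; this toy table only looks at the previous token.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.7, "a": 0.3},
    "the": {"cat": 0.5, "dog": 0.4, "<end>": 0.1},
    "a": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.8, "<end>": 0.2},
    "dog": {"sat": 0.6, "<end>": 0.4},
    "sat": {"<end>": 1.0},
}

def pick_greedy(probs):
    """Greedy decoding: always take the most likely token."""
    return max(probs, key=probs.get)

def pick_top_k(probs, k, rng):
    """Top-k sampling: sample among the k most likely tokens."""
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    tokens = [tok for tok, _ in top]
    weights = [p for _, p in top]
    return rng.choices(tokens, weights=weights, k=1)[0]

def generate(pick, max_tokens=10):
    """Autoregressive loop: append one token at a time until <end>."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        nxt = pick(NEXT_TOKEN_PROBS[tokens[-1]])
        if nxt == "<end>":
            break
        tokens.append(nxt)
    return tokens[1:]

rng = random.Random(0)
print(generate(pick_greedy))  # ['the', 'cat', 'sat']
print(generate(lambda probs: pick_top_k(probs, k=2, rng=rng)))
```

Greedy decoding gives the same sentence every time, while top-k sampling can produce different sentences from the same starting point.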

What I liked:

This lesson was a good refresher for me. Even if you’ve studied NLP before, the hands-on exercises using Hugging Face Tokenizers give you useful practical experience with real tools.

What was difficult:

If you’re new to NLP, some of the ideas might feel a bit confusing at first. You may need to stop and read some background material to understand everything better. That’s completely normal and part of learning something new.

Lesson 3: Transformers and Attention Mechanisms

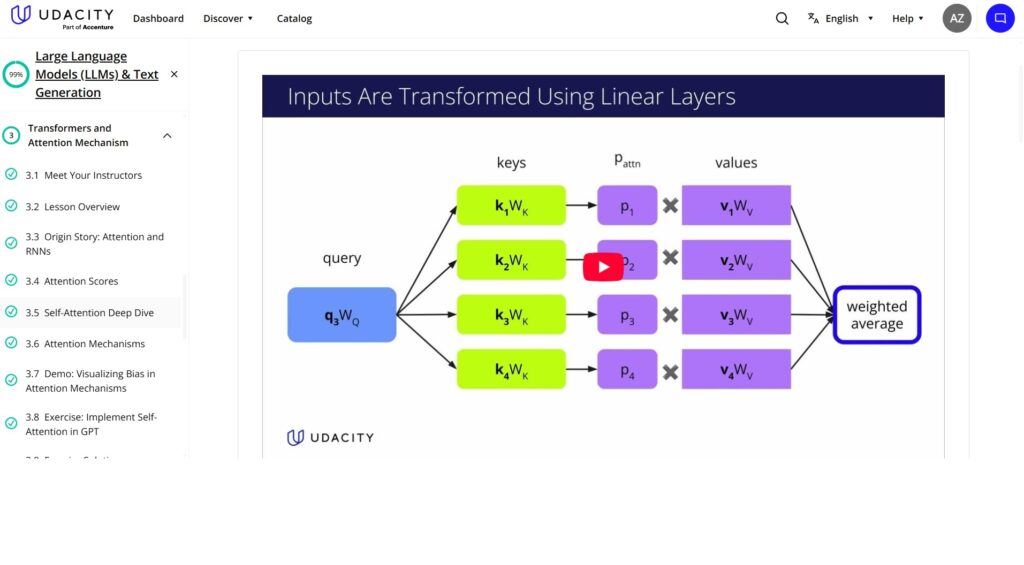

This lesson focuses on the core ideas behind large language models. You learn what makes them work so well and why they’ve become the standard in the field.

You’ll learn about:

- How self-attention works and why it’s important

- Why transformers replaced older models like RNNs

- The basic structure of GPT models

- Some of the latest research topics, including optimization, safety, and ethics
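For readers who want to see self-attention in code, here is a minimal sketch of scaled dot-product attention in plain Python. It is not the course’s implementation. It assumes the queries, keys, and values are the input vectors themselves, whereas a real transformer first applies learned projection matrices.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score this position against every position, scaled by sqrt(d_k).
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy 2-d token vectors, used as Q, K, and V at once (an assumption).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(x, x, x):
    print([round(v, 3) for v in row])
```

Each output row is a blend of all the input vectors, weighted by how strongly that position attends to each of the others.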

What I liked:

The visual explanations of how attention works were really helpful. I also enjoyed the exercises where you get to build your own small GPT model — that made the ideas much clearer.

What was difficult:

This part is pretty heavy with information. To keep up with the coding exercises, you’ll need to have a good handle on Python and PyTorch. Without that, it might get frustrating.

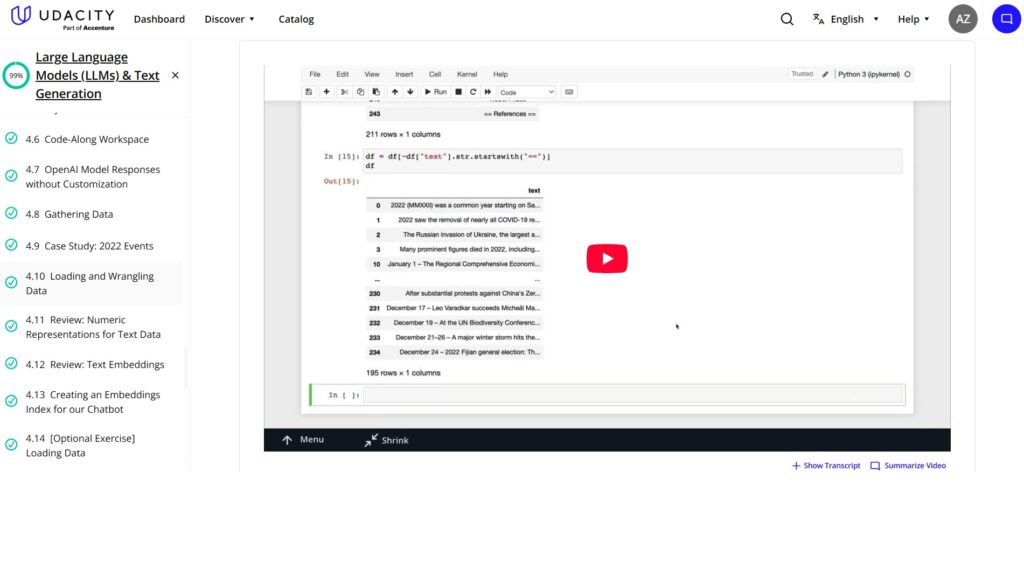

Lesson 4: Retrieval Augmented Generation (RAG)

In this lesson, you learn how to make large language models smarter by combining them with outside data. It shows you how to bring in extra information so the model can give better, more accurate answers.

You’ll learn how to:

- Build an embeddings index to organize data

- Use semantic search to find relevant information

- Add context to prompts so the model understands what you’re asking better

- Handle token limits carefully to make sure everything fits
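The last two steps, adding context and respecting token limits, can be sketched as follows. This is a simplified illustration under stated assumptions: `count_tokens` is a crude word counter standing in for a real tokenizer, the chunks are assumed to arrive already ranked by relevance, and the prompt wording is made up.

```python
def count_tokens(text):
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def build_prompt(question, ranked_chunks, max_tokens):
    """Add retrieved chunks, most relevant first, until the budget is used up."""
    header = "Answer the question using only the context below.\n\nContext:\n"
    footer = f"\n\nQuestion: {question}\nAnswer:"
    used = count_tokens(header) + count_tokens(footer)
    kept = []
    for chunk in ranked_chunks:
        cost = count_tokens(chunk)
        if used + cost > max_tokens:
            break  # the next chunk would overflow the budget
        kept.append(chunk)
        used += cost
    return header + "\n".join(kept) + footer

# Hypothetical chunks, assumed to be sorted from most to least relevant.
chunks = [
    "The library was founded in 1901.",
    "It moved to its current building in 1950.",
    "The cafe on the ground floor sells coffee and pastries every day.",
]
prompt = build_prompt("When was the library founded?", chunks, max_tokens=32)
print(prompt)
```

With this budget the least relevant chunk is left out. In practice you would count tokens with the tokenizer that matches your model, since word counts only approximate token counts.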

What I liked:

I really enjoyed learning how real-world question-and-answer bots work. You’re not just sending basic requests to an API — you’re creating smart, context-aware queries that help the model give better responses.

What was tricky:

The part about semantic search, especially using cosine similarity, took me a few tries to fully understand. It wasn’t easy at first, but going over it a couple of times helped a lot.
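If cosine similarity feels abstract, a small worked example may help. The sketch below is an illustration and not course code. It assumes a toy “embedding” made of word counts, so it only captures word overlap, whereas real semantic search uses vectors from a learned embedding model. The function names and documents are hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """cos(a, b) = (a . b) / (|a| * |b|); 1.0 means same direction, 0.0 means no overlap."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def search(query, docs, top_n=2):
    """Rank documents by similarity to the query, highest first."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, embed(doc)), doc) for doc in docs]
    return sorted(scored, reverse=True)[:top_n]

documents = [
    "how to reset your password",
    "opening hours of the library",
    "reset a forgotten password by email",
]
for score, doc in search("forgot my password reset", documents):
    print(round(score, 3), doc)
```

The key idea is that cosine similarity compares the direction of two vectors rather than their length, so a long document and a short query can still score as close matches.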

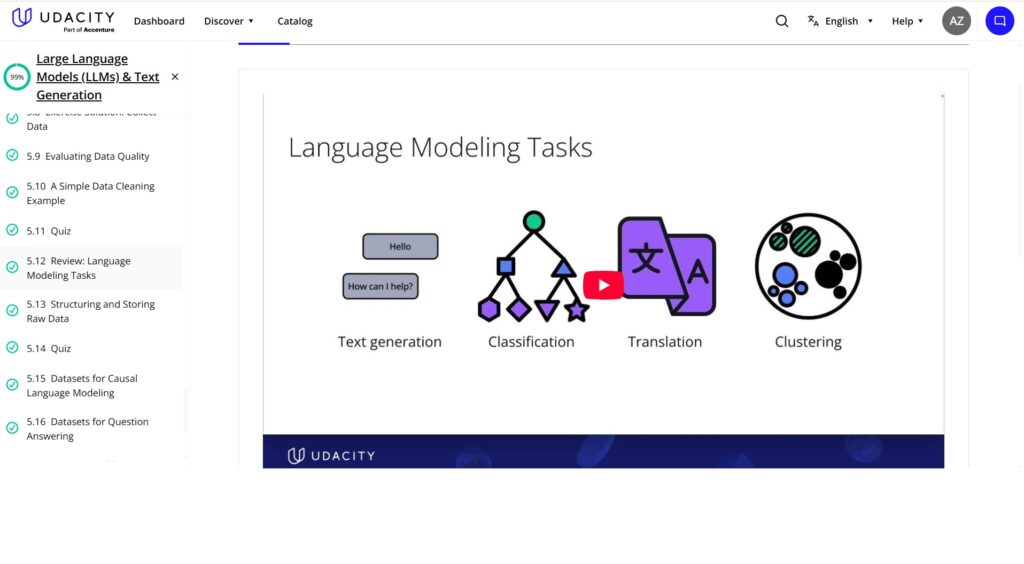

Lesson 5: Build Custom Datasets for LLMs

This lesson focuses on getting your data ready so you can train or fine-tune language models effectively. It’s all about collecting, cleaning, and organizing the right information.

You learn how to:

- Do web scraping using tools like BeautifulSoup and Selenium

- Clean and structure the data so it’s useful

- Check the quality of your dataset to make sure it’s good enough

- Create datasets tailored for specific tasks, like question answering
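The cleaning and quality-check steps might look something like this in practice. It is a minimal sketch, assuming the scraped data is already a list of question and answer pairs. The function names, the sample rows, and the three-word minimum are hypothetical choices, not rules from the course.

```python
import re

def clean_text(text):
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()

def build_qa_dataset(raw_pairs, min_answer_words=3):
    """Clean question/answer pairs, dropping duplicates and too-short answers."""
    seen = set()
    dataset = []
    for question, answer in raw_pairs:
        question, answer = clean_text(question), clean_text(answer)
        key = question.lower()
        if not question or key in seen:
            continue  # skip empty or repeated questions
        if len(answer.split()) < min_answer_words:
            continue  # skip answers too short to be useful
        seen.add(key)
        dataset.append({"question": question, "answer": answer})
    return dataset

# Hypothetical scraped rows with typical problems.
raw = [
    ("What is RAG?\n", "  Retrieval augmented generation adds  outside data to prompts. "),
    ("what is rag?", "A duplicate question with different casing."),
    ("What is a token?", "N/A"),
    ("", "An answer with no question attached."),
]
dataset = build_qa_dataset(raw)
print(dataset)
```

Only the first row survives here: the others are a duplicate, a placeholder answer, and a row with no question.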

What I liked:

The step-by-step guidance on scraping and cleaning was really helpful. These are practical skills you’ll definitely need if you want to fine-tune models yourself later.

What was tricky:

You have to be careful with web scraping because it’s easy to get blocked or end up with messy data if you’re not cautious. So, it requires patience and attention.
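One way to be cautious is to check a site’s robots.txt before fetching pages and to respect any crawl delay it asks for. The sketch below uses Python’s standard `urllib.robotparser` module. The robots.txt content, the URLs, and the `my-course-scraper` user agent are made up for illustration, and nothing here is taken from the course itself.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "my-course-scraper"  # hypothetical user agent name

def allowed(url):
    """Return True if robots.txt permits this user agent to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)

for url in ("https://example.com/articles/1", "https://example.com/private/data"):
    print(url, allowed(url))

# Seconds to wait between requests, if the site specifies a delay.
print(parser.crawl_delay(USER_AGENT))
```

In a real scraper you would call `time.sleep` with that delay between requests and skip any URL where `allowed` returns `False`.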

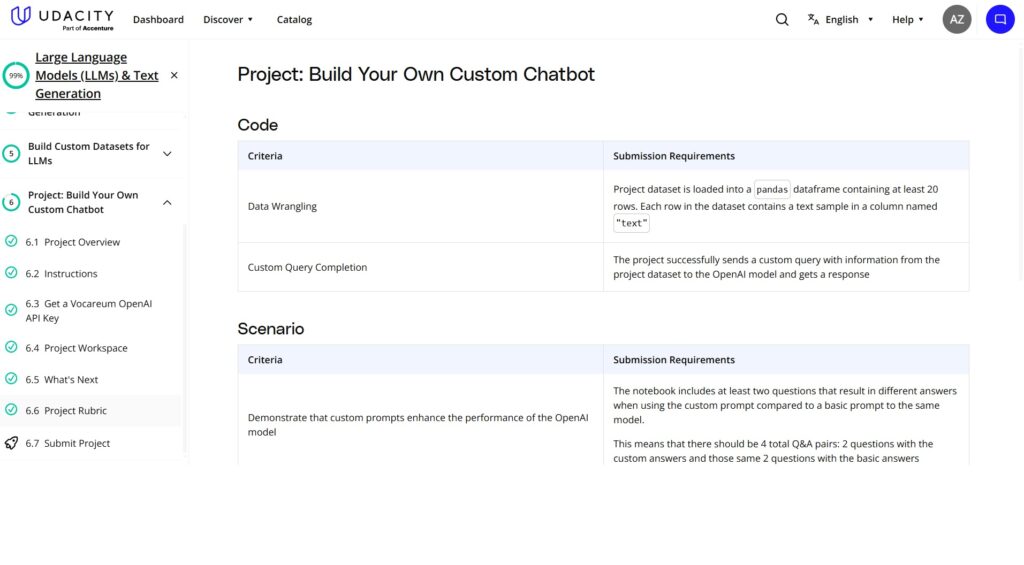

Final Project: Build Your Own Custom Chatbot

This is the big finish where you get to put everything you’ve learned into practice.

You’ll:

- Create or gather a dataset for your bot to draw on

- Set up embeddings to organize your data

- Write prompts that help the bot understand and respond well

- Build a custom chatbot powered by OpenAI’s models

What I liked:

This project really helped me bring all the lessons together. I built a chatbot focused on a specific topic, and seeing it answer real questions was incredibly rewarding.

What I wished for:

It would be great to have more detailed instructions or templates because you have to figure out a lot on your own, which can be a bit challenging.

Key Skills I Gained

By the time I finished, I had really improved in several important areas:

- Prompt engineering — how to ask models the right way

- A solid understanding of transformers and attention mechanisms

- Using semantic search and cosine similarity to find relevant info

- How to build embeddings to organize data

- Web scraping and cleaning datasets to get good-quality data

- Working with APIs from OpenAI, Together AI, and Hugging Face

This program is very hands-on and practical. You don’t just sit and watch videos — you actually build things as you learn.

What I Loved

- The lessons were led by experts and explained clearly. Everything was well organized and easy to follow.

- I got to work with real-world tools like OpenAI API, Hugging Face, PyTorch, and Pandas.

- The course had plenty of hands-on exercises, so it wasn’t just about theory — I spent a lot of time coding.

- The topics were very up-to-date, covering things like Retrieval Augmented Generation (RAG), transformers, and ethical AI.

- The final project was a great way to put it all together by building a complete chatbot from start to finish.

What Could Be Improved

- The course moves pretty quickly, so if you’re new to NLP or Python, it might feel a bit overwhelming at times.

- I wished there was more help with projects — like starter code or clearer guidelines — because some parts felt like you had to figure a lot out on your own.

- The prerequisites are real: you need to know intermediate Python, PyTorch, and have a basic understanding of machine learning and deep learning. Without that, it can be hard to keep up.

- The course doesn’t cover heavy fine-tuning of models. It focuses on prompting and Retrieval Augmented Generation (RAG) rather than deep customization.

Who Should Take This Nanodegree?

- If you have intermediate Python skills and some experience with deep learning, this course is a great fit.

- Data scientists or machine learning engineers who want to learn more about large language models (LLMs) will find it useful.

- Developers interested in building custom chatbots or apps powered by LLMs will benefit a lot.

- Anyone looking for hands-on experience with APIs like OpenAI or Hugging Face will get good practice here.

If you’re completely new to Python, deep learning, or NLP, I’d suggest getting comfortable with the basics before starting this program.

Pricing & Support

The course costs about $399 per month, but keep an eye out for discounts that can make it more affordable.

Sometimes, Udacity also offers scholarships, especially for underrepresented groups, so it’s worth checking if you qualify.

When you join, you’ll get support from technical mentors who can help with questions, access to student forums where you can connect with others, and career services to help you move forward after the course.

My Personal Takeaways

Taking this Nanodegree was a solid learning experience for me. It helped me understand large language models much better—not just how to use them, but how they actually work under the hood.

I liked that the course was very hands-on. Doing projects and coding exercises made the lessons feel real and practical. Building my own chatbot was definitely the most rewarding part because it pulled everything together.

That said, the course moves pretty fast and expects you to already know some Python, deep learning, and NLP basics. There were moments when I had to pause and look things up, especially in the coding sections. So, it might feel a bit tough if you’re just starting out.

Overall, if you have some background and want to go beyond just using tools like ChatGPT, this Nanodegree can teach you useful skills—like prompt engineering, working with APIs, and preparing data—that you’ll actually use in real projects.

But if you’re new to these topics, it might be better to get comfortable with the basics first before jumping in.

So, it’s a good program, but it’s not for everyone—and that’s okay. It really depends on where you’re starting from and what you want to get out of it.

Is This Nanodegree Worth Your Time and Money?

Whether this Nanodegree is right for you really depends on your background and what you want to get out of it.

If you already know some Python and have a basic understanding of machine learning or NLP, this program can give you practical skills and hands-on experience working with large language models. You’ll get to build real projects and use tools like OpenAI’s API and Hugging Face, which is great if you want to create your own chatbots or LLM-powered apps.

On the other hand, if you’re new to these topics, the course might feel a bit fast and sometimes confusing. There’s not a lot of hand-holding, so you may find yourself needing to pause and learn some things on your own. Also, it doesn’t go deep into fine-tuning models, so if that’s what you’re after, it might not cover everything you want.

In the end, this Nanodegree can be a good fit if you have some experience and want a practical way to level up your LLM skills. But if you’re just starting out, you might want to build a stronger foundation first before jumping in.

So, it’s worth thinking about where you are right now and what your goals are before deciding.

Conclusion

That wraps up my review of Udacity’s Large Language Models Nanodegree. Overall, it’s a solid program if you’re ready to get hands-on with LLMs and already have some background in Python and machine learning.

I’ve shared both the strengths and the challenges of the course, so you can decide if it fits where you are in your learning journey.

If you want practical experience building real-world AI applications and understanding how large language models work under the hood, this could be a good step for you.

I hope this helps you make an informed choice!

Written By Aqsa Zafar

Aqsa Zafar is a Ph.D. scholar in Machine Learning at Dayananda Sagar University, specializing in Natural Language Processing and Deep Learning. She has published research in AI applications for mental health and actively shares insights on data science, machine learning, and generative AI through MLTUT. With a strong background in computer science (B.Tech and M.Tech), Aqsa combines academic expertise with practical experience to help learners and professionals understand and apply AI in real-world scenarios.